Summer School on Cyber-Physical Systems - 2014 Edition - Program


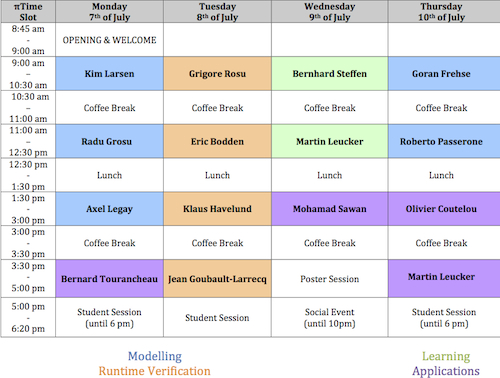

Time Table

Main Titles and Abstracts

On optimizing runtime monitors, both statically and dynamically

Eric Bodden, Fraunhofer SIT, TU Darmstadt and EC SPRIDE

We will discuss Clara, a static-analysis framework for partially evaluating finite-state runtime monitors at compile time. Clara uses static typestate analyses to automatically convert any AspectJ monitoring aspect into a residual runtime monitor that only monitors events triggered by program locations that the analyses failed to prove safe. If the static analysis succeeds on all locations, this gives strong static guarantees. If not, the efficient residual runtime monitor is guaranteed to capture property violations at runtime. Researchers can use Clara with most runtime-monitoring tools that implement monitors through AspectJ aspects. In this tutorial, we will show how to use Clara to partially evaluate monitors generated from tracematches and using JavaMOP. We will explain how Clara’s analyses work and what positive effects they can have on the monitor’s implementation.

In addition, we will briefly discuss recent work on constructing prm4j, a novel and fully reusable Java library for efficient runtime monitoring. It is quite challenging to make such a library generic and efficient at the same time. This is why prm4j generates some specialized, efficient data structures on the fly, at the time the user registers the monitored property with the library. In contrast to systems such as JavaMOP or Tracematches, however, the specialization does not generate code but rather parameterizes generic data structures directly on the heap.

Sensor Networks for industrial usage

Olivier Coutelou, Schneider Electric, France

The Internet of Things and connected objects have become key topics for Schneider Electric R&D and Innovation teams. Wireless sensors and sensor networks bring considerable value, such as easy deployment, flexibility, and mobility, at very competitive cost. As a natural consequence, more and more Schneider Electric offers will take advantage of connected sensors in the future.

After a short presentation of “ZigBee Green Power”, the very efficient wireless protocol we have chosen at the sensor level, two examples will be described:

- Energy Monitoring of Machines in industrial factories.

- Energy and Electrical measurements dedicated to MV/LV substation in a Smart Grid environment.

At the end of the presentation, two demos will be given:

- One to show simple self-powered sensors allowing energy estimation.

- The other to demonstrate that accurate electrical measurements are possible using self-powered sensors.

Reachability Analysis of Hybrid Systems

Goran Frehse, University of Grenoble

Hybrid systems describe the evolution of a set of real-valued variables over time with ordinary differential equations and event-triggered resets. Set-based reachability analysis is a useful method to formally verify safety and bounded liveness properties of such systems. Starting from a given set of initial states, the successor states are computed iteratively until the entire reachable state space is exhausted. While reachability is undecidable in general and even one-step successor computations are hard to compute, recent progress in approximative set computations allows one to fine-tune the trade-off between computational cost and accuracy. In this talk we give an introduction to reachability analysis and present some of the techniques with which systems with complex dynamics and hundreds of variables have been successfully verified.
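The iterative successor computation described above can be illustrated on a finite toy system. The following is a minimal sketch in Python, assuming an explicit finite transition relation; the `successors` map and the state names are hypothetical. Tools for hybrid systems work on continuous sets and rely on over-approximation, which this sketch does not attempt.

```python
from collections import deque

def reachable(initial, successors):
    """Return all states reachable from `initial` under `successors`.

    `successors` maps a state to an iterable of its one-step successors.
    """
    seen = set(initial)
    frontier = deque(initial)
    while frontier:  # stop once no new states appear (fixed point)
        state = frontier.popleft()
        for nxt in successors.get(state, ()):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen

# Hypothetical toy system: "overheat" exists but cannot be reached from "off".
toy = {
    "off": ["heating"],
    "heating": ["off"],
    "overheat": ["off"],
}
reach = reachable({"off"}, toy)
is_safe = "overheat" not in reach  # safety: the bad state is never reached
```

A safety property holds here exactly when no bad state appears in the computed set; with over-approximated sets, the same check remains sound but may raise false alarms.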

Bio:

Goran Frehse is an assistant professor of computer science at Verimag Labs, University of Grenoble, France. He holds a Diploma in Electrical Engineering from Karlsruhe Institute of Technology, Germany, and a PhD in Computer Science from Radboud University Nijmegen, The Netherlands. He was a PostDoc at Carnegie Mellon University from 2003 to 2005. Goran Frehse developed PHAVer, a tool for verifying Linear Hybrid Automata, and is leading the ongoing development of SpaceEx, a verification platform for hybrid systems.

Hybrid Systems: A Crash Course

Radu Grosu, Cyber-Physical Systems Group, Vienna University of Technology

Aim

The technological developments of the past two decades have nurtured a fascinating convergence of computer science and electrical, mechanical and biological engineering. Nowadays, computer scientists work hand in hand with engineers to model, analyze and control complex systems that exhibit discrete as well as continuous behavior. Examples of such systems include automated highway systems, air traffic management, automotive controllers, robotics and real-time circuits. They also include biological systems, such as immune response, bio-molecular networks, gene-regulatory networks, protein-signaling pathways and metabolic processes.

The more pervasive and complex these systems become, the more the infrastructure of our modern society relies on their dependability. Traditionally, however, the modeling, analysis and control theory of discrete systems is quite different from that of continuous systems. The first is based on automata theory, a branch of discrete mathematics, where time is typically abstracted away. The second is based on linear systems theory, of differential (or difference) equations, a branch of continuous mathematics where time is of the essence. This course is focused on the principles underlying their combination. By the end of this course the students will be provided with detailed knowledge and substantial experience in the mathematical modeling, analysis and control of hybrid systems.

Subject

Hybrid automata as discrete-continuous models of hybrid systems. Executions and traces of hybrid automata. Infinite transition systems as a time-abstract semantics of hybrid automata. Finite abstractions of infinite transition systems. Language inclusion and language equivalence. Simulation and bisimulation. Quotient structures. Approximate notions of inclusion and simulation. State logics, and model checking. Partition-refinement and model checking within mu-calculus. Classes of hybrid automata for which the model-checking problem is decidable. Modern overapproximation techniques for reachability analysis.

Program your own RV system

Klaus Havelund, NASA JPL

The goal of the formal methods research field is to develop theories and techniques for demonstrating programs correct with respect to formalized specifications. The problem is unfortunately undecidable in general. Even practical and useful approximations are hard to achieve. Runtime verification (RV) is a discipline that, in part, just focuses on checking single execution traces against formalized specifications, hence a more scalable solution, but obviously with less coverage. The technique can be used during testing or during deployment, in both cases either online as the system executes, or offline by analyzing generated log files. The number of RV systems being developed by the research community is steadily growing as a result of exploring expressiveness and elegance of notations, as well as efficiency of algorithms, in particular when dealing with data parameterized events. These systems are usually complex and cannot be understood without intense study. In this talk we shall demonstrate how to program an RV system in very few lines of code, as an internal DSL (Domain Specific Language) in the functional object-oriented programming language Scala. An internal DSL is essentially an API in the host programming language. Scala is convenient for developing such internal DSLs, offering convenient syntax for API use. The system is expressive, elegant and reasonably efficient. The internal DSL will be compared to a similar external DSL (a stand-alone language with its own parser) with comparable language constructs, developed to explore indexing algorithms for efficient monitoring.
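To give a flavour of what checking a single execution trace against a specification involves, here is a minimal sketch. It is written in Python rather than Scala and is not the system presented in the talk. The event format `(name, resource)` and the property (every acquired resource is eventually released, and nothing is released without being held) are assumptions chosen for illustration.

```python
def check_trace(trace):
    """Check a finite trace of (event_name, resource) pairs.

    Returns a list of violation messages; an empty list means the trace
    satisfies the property.
    """
    held = set()       # resources currently acquired
    violations = []
    for index, (name, resource) in enumerate(trace):
        if name == "acquire":
            if resource in held:
                violations.append(f"event {index}: {resource} acquired twice")
            held.add(resource)
        elif name == "release":
            if resource not in held:
                violations.append(f"event {index}: {resource} released but not held")
            held.discard(resource)
    # At the end of the trace, anything still held was never released.
    for resource in sorted(held):
        violations.append(f"end of trace: {resource} never released")
    return violations

good = [("acquire", "a"), ("acquire", "b"), ("release", "a"), ("release", "b")]
bad = [("acquire", "a"), ("release", "b")]
```

The `resource` argument plays the role of the data parameter of an event: the monitor tracks one small state per resource, which is where indexing becomes important for efficiency on long traces.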

Bio. Dr. Klaus Havelund is a Senior Research Scientist at NASA's Jet Propulsion Laboratory's (JPL's) Laboratory for Reliable Software (LaRS). Before joining JPL in 2006, Klaus spent eight years at NASA Ames Research Center, California. He has many years of experience in research and application of formal methods and rigorous software development techniques for critical systems. This includes topics such as programming language semantics, specification language design, theorem proving, model checking, static analysis (developing coding standards) and dynamic analysis. His current main focus of interest is on expressive specification notations for dynamic analysis and their integration with high-level programming languages. Klaus earned his PhD in Computer Science at the University of Copenhagen, Denmark, which in part was carried out at Ecole Normale Superieure in Paris, France, followed by a post-doc at Ecole Polytechnique, Paris.

Statistical Model Checking of Stochastic Hybrid Systems using UPPAAL-SMC

Kim Guldstrand Larsen, Aalborg University, Denmark

Timed automata, priced timed automata and energy automata have emerged as useful formalisms for modeling real-time and energy-aware systems as found in several embedded and cyber-physical systems. Whereas the real-time model checker UPPAAL allows for efficient verification of hard timing constraints of timed automata, model checking of priced timed automata and energy automata is in general undecidable, a notable exception being cost-optimal reachability for priced timed automata as supported by the branch UPPAAL Cora. These obstacles are overcome by UPPAAL-SMC, the new highly scalable engine of UPPAAL, which supports (distributed) statistical model checking of stochastic hybrid systems with respect to weighted metric temporal logic.

The lecture will review UPPAAL-SMC and its applications to a range of real-time and cyber-physical examples, including schedulability and performance evaluation of mixed criticality systems, modeling and analysis of biological systems, energy-aware wireless sensor networks, smart grids, energy-aware buildings and battery scheduling. Also, we shall see how other branches of UPPAAL may benefit from the new scalable simulation engine of UPPAAL-SMC in order to improve their performance as well as their scope in terms of the models that they support. This includes application of UPPAAL-SMC to counterexample generation, refinement checking, controller synthesis, optimization, testing and meta-analysis.
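The core idea of statistical model checking, estimating the probability of a property from simulated runs instead of exploring the state space, can be sketched as follows. This is a generic Monte Carlo illustration in Python and not UPPAAL-SMC's algorithm or API. The toy model `simulate_run`, its parameters, and the use of the Chernoff-Hoeffding bound to choose the number of runs are assumptions made for the example.

```python
import math
import random

def runs_needed(epsilon, delta):
    """Number of runs so that the estimate is within epsilon of the true
    probability with confidence at least 1 - delta (Chernoff-Hoeffding)."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))

def simulate_run(rng, steps=10, fail_prob=0.05):
    """Hypothetical stochastic model: returns True if no step fails."""
    return all(rng.random() >= fail_prob for _ in range(steps))

def estimate_probability(epsilon, delta, seed=0):
    rng = random.Random(seed)  # fixed seed for reproducibility
    n = runs_needed(epsilon, delta)
    successes = sum(simulate_run(rng) for _ in range(n))
    return successes / n, n

estimate, n = estimate_probability(epsilon=0.02, delta=0.01)
exact = 0.95 ** 10  # known in closed form for this toy model only
```

The number of runs depends only on the requested precision and confidence, not on the size of the model, which is what makes the approach scale; the price is that the answer is an estimate with a confidence level and not a proof.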

Kim Guldstrand Larsen is a full professor in computer science and director of the centre of embedded software systems (CISS). He received his Ph.D. in Computer Science from Edinburgh University in 1986, and is an honorary doctor (honoris causa) at Uppsala University (1999) and at Ecole Normale Superieure de Cachan, Paris (2007). He has also been an Industrial Professor in the Formal Methods and Tools Group, Twente University, The Netherlands. His research interests include modeling, verification, and performance analysis of real-time and embedded systems, with application and contributions to concurrency theory and model checking. In particular, since 1995 he has been principal investigator of the tool UPPAAL and co-founder of the company UP4ALL International. He has published more than 230 publications in international journals and conferences, has co-authored 6 software tools, and is involved in a number of Danish and European projects on embedded and real-time systems. His H-index (according to Harzing's Publish or Perish, January 2012) is 63 (the highest in Denmark). He is a life-long member of the Royal Danish Academy of Sciences and Letters, Copenhagen, and is a member of the Danish Academy of Technical Sciences as well as a member of Academia Europaea. Since January 2008 he has been a member of the board of the Danish Independent Research Councils, as well as Danish National Expert for the European ICT programme.

OrchIDS: on the value of rigor in intrusion detection

Jean Goubault-Larrecq, ENS Cachan

OrchIDS is an intrusion detection system developed at LSV (ENS Cachan, INRIA, CNRS) that has some unique features: it detects complex attacks, correlating events through time, it is real-time, and interfaces with multiple sources of security events. The purpose of such a system is to detect attacks on computer systems and networks, and to counter them.

People in this area require practical solutions to concrete concerns. Security tools must be usable and give results on real, deployed systems and networks. This is definitely commendable. But this is also sometimes taken as an excuse for avoiding rigorous practices: rigorous definitions, proofs of algorithms, of optimality results.

I will attempt to convince you that we can have a rigorous approach to intrusion detection, and have a fast tool, too. In fact, part of the efficiency of Orchids stems, precisely, from the rigor we have put into it.

I will illustrate this with two specific cases (after having spent some time trying to convince you that computer security was important, using a few scary stories).

The first one is the Orchids core algorithm itself, which owes its efficiency to a well-crafted definition of what (not how) we wish to detect. It is then a theorem that the algorithm (the "how") really implements this definition. And we also obtain nice optimality results on the way.

The second one is an Orchids plug-in, NetEntropy, which classifies network flows as random/encrypted/compressed or not. This is useful to detect some hacked network traffic in difficult (cryptographic) situations. I will show how mathematics (statistics, here) is instrumental in evaluating the right confidence intervals. The result is surprising: NetEntropy detects subversion in situations that are so undersampled that commonsense would tell you one cannot detect anything.
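The quantity underlying such a classification is the empirical Shannon entropy of the bytes in a flow. The sketch below, in Python, computes only this basic measure. It is not NetEntropy's code, and the confidence-interval treatment of undersampled data discussed in the talk is not reproduced here. The function name and the sample inputs are hypothetical.

```python
import math
from collections import Counter

def byte_entropy(data: bytes) -> float:
    """Empirical Shannon entropy of `data`, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

uniform = bytes(range(256))          # every byte value once: maximal entropy
constant = b"a" * 64                 # a single repeated byte: zero entropy
text = b"GET /index.html HTTP/1.1"   # plain text: somewhere in between
```

Encrypted or compressed payloads tend to score close to 8 bits per byte, while plain text scores markedly lower. On short samples the empirical value cannot exceed the base-2 logarithm of the sample length, so a raw threshold is unreliable there; this is the undersampled regime in which the statistics matter.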

Learning Automata with Applications in Verification

Martin Leucker, University of Luebeck

In this lecture, we give an overview of techniques for learning automata. Starting with Biermann’s and Angluin’s algorithms, we describe some of the extensions suitable for specialized or richer classes of automata and discuss implementation issues. Furthermore, we survey their recent application to verification problems.

From specifications to contracts

Roberto Passerone, Universita' degli Studi di Trento

The specification of a system or a component is usually expressed in terms of the properties that the system in question should satisfy, and/or what it should do. In the last decade, a new form of specification has emerged which defines both the properties of the system and its assumptions on the context in which the system is used. These specifications take the name of interfaces or contracts. In this talk we will review the notion of a specification, and how it can be converted into a contract. We will analyze the role of the assumptions, and introduce the operators that are used to combine contracts through parallel composition and conjunction. Next we will discuss relationships between contracts that define their refinement and compatibility, and between a contract and an implementation to check its compliance. We then give examples of practical interface and contract models.
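As an illustration of these operators, the sketch below follows one common set-based formalization of assume/guarantee contracts from the literature, in which a contract is a pair of sets of behaviours; the talk may use a different model. The class name, the finite universe of integer "behaviours", and the example contracts are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Contract:
    universe: frozenset      # all behaviours under consideration
    assumptions: frozenset   # behaviours the environment may exhibit
    guarantees: frozenset    # behaviours the component promises

    def saturated(self):
        """Make the guarantee explicit about behaviours outside the assumptions."""
        g = self.guarantees | (self.universe - self.assumptions)
        return Contract(self.universe, self.assumptions, g)

    def refines(self, other):
        """self refines other: weaker assumptions and stronger guarantees."""
        a, b = self.saturated(), other.saturated()
        return b.assumptions <= a.assumptions and a.guarantees <= b.guarantees

    def conjoin(self, other):
        """Conjunction: a contract that refines both operands."""
        a, b = self.saturated(), other.saturated()
        return Contract(self.universe, a.assumptions | b.assumptions,
                        a.guarantees & b.guarantees)

    def compose(self, other):
        """Parallel composition of two components' contracts."""
        a, b = self.saturated(), other.saturated()
        g = a.guarantees & b.guarantees
        assume = (a.assumptions & b.assumptions) | (self.universe - g)
        return Contract(self.universe, assume, g)

U = frozenset(range(8))
spec = Contract(U, frozenset({0, 1, 2, 3}), frozenset({0, 1}))
impl = Contract(U, frozenset({0, 1, 2, 3, 4, 5}), frozenset({0}))
both = spec.conjoin(impl)
composed = spec.compose(impl)
```

Here `impl` refines `spec` because it accepts more environments while promising less freedom in its own behaviour, and the conjunction refines each of its operands.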

Roberto Passerone is assistant professor of Electrical Engineering at the University of Trento, Italy. Before that, he was research scientist at Cadence Design Systems, Berkeley, California. His research interests are in methodologies for system level design, integration of heterogeneous systems, and the design of wireless sensor networks. Roberto received his PhD from the University of California, Berkeley.

Monitoring and Predicting with RV-Monitor and RV-Predict

Grigore Rosu, University of Illinois at Urbana-Champaign

RV-Monitor and RV-Predict are two recent runtime verification systems developed by Runtime Verification, Inc. (http://runtimeverification.com), which incorporate and significantly extend runtime verification technology developed at the University of Illinois at Urbana-Champaign, such as JavaMOP and jPredict. This talk will present these systems in detail. RV-Monitor is an efficient monitoring library generator for parametric properties. A novel implementation of JavaMOP using RV-Monitor is also available. RV-Predict is a concurrency bug detector/predictor, which generates causal models expressed using constraints from program executions and then solves those using constraint solvers, e.g., Z3. The technique underlying RV-Predict is mathematically proved to generate the maximal causal model for any given execution, that is, no other dynamic detector/predictor can find more concurrency bugs analyzing the same execution trace.

Challenges of Wirelessly Managing Power and Data for Implantable Bioelectronics

Mohamad Sawan, Polytechnique Montreal

Emerging implantable biosystems (IBS) for diagnosis and recovery of neural vital functions are a promising alternative for studying neural activities underlying cognitive functions and pathologies. Following a summary of undertaken IBS projects (neuroprosthesis and Lab-on-chip devices) dealing with spike- and ion-based neurorecording and neurostimulation, this talk covers the architecture of a typical device and the main building blocks employed, with a focus on the RF parts, including both the recovery of energy to power up the IBS and the bidirectional data interface for its real-time operation. Due to numerous health limits, currently employed techniques to harvest energy are not convenient for bioengineering applications. Energy-scavenging methods such as cantilever movement, structure vibration, temperature, and bio-energy-based techniques (action potentials, glucose, enzymes, ...) all remain inefficient. Rechargeable batteries and other electrochemical energy converters, such as electricity/heat generation from the reaction of hydrogen and oxygen, are likewise not convenient because of safety issues. Energy transfer techniques based on transcutaneous RF inductive powering are the method of choice and will then be elaborated. The use of multiple technologies to facilitate on-chip integration of the required high-voltage, low-power RF front-end will also be described. In addition to well-established inductive links, factors affecting power efficiency and reliability will be described, such as signal conditioning, active rectifiers, various types of DC/DC converters, voltage regulators, custom integrated circuitries, calibration techniques, and multidimensional implementation challenges (power management, low-power consumption circuits, high-data rate communication modules, small-size, low-weight and reliable devices).

Mohamad Sawan received the Ph.D. degree in 1990 from the EE Dept. of Sherbrooke University, Canada. He joined Polytechnique Montréal in 1991, where he is currently a Professor of microelectronics and biomedical engineering. Dr. Sawan holds a Canada Research Chair in Smart Medical Devices, leads the Microsystems Strategic Alliance of Quebec, and is founder of the Polystim Neurotechnologies Lab. Dr. Sawan is founder and cofounder of several int’l conferences such as the NEWCAS and BIOCAS. He is also cofounder and Associate Editor (AE) of the IEEE Trans. on BIOCAS, Deputy Editor-in-Chief of the IEEE TCAS-II, and Editor, AE, and member of the board of several int’l journals. He is a member of the Board of Governors of IEEE CASS. He has published more than 600 peer-reviewed papers, two books, 10 book chapters, and 12 patents. He has received several awards, among them the Medal of Merit from the President of Lebanon, the Queen Elizabeth II Diamond Jubilee Medal, and the Bombardier and Jacques-Rousseau Awards. Dr. Sawan is Fellow of the IEEE, Fellow of the Canadian Academy of Engineering, and Fellow of the Engineering Institute of Canada. He is also “Officer” of the National Order of Quebec.

Active Automata Learning: From DFA to Interface Programs and Beyond

Bernhard Steffen, TU Dortmund

Web services or other third-party or legacy software components, which come without code and/or appropriate documentation, are intrinsically tied to the modern and increasingly popular orchestration-based development style of service-oriented solutions. [Active] automata learning has been shown to be a powerful means to overcome perhaps the major drawback of these components, their inherent black-box character. The success story began a decade ago, when its application led to major improvements in the context of regression testing. Since then, the technology has undergone an impressive development, in particular concerning the aspect of practical application.

The talk will review this development, while focussing on the treatment of data, the major source of undecidability, and therefore the problem with the highest potential for tailored, application-specific solutions.

In the first practical applications of active learning, data were typically simply ignored or radically abstracted. In the meantime, extensions to data languages have been developed, which led to the introduction of more expressive models like the so-called register automata. They are able to faithfully represent interface programs, i.e. programs describing the protocol of interaction with components and services. We will illustrate with a number of examples that they can be learned rather efficiently, and that their potential concerning both increased expressivity of the model structure and scalability is high.

The Internet of Things (IoT) Energy Consumption Issues

Bernard Tourancheau, INP Grenoble

The Internet of Things (IoT) is the new IT paradigm for the 2010s. It promises a huge growth in the number of interconnected devices: more than 50 billion by 2020, according to Ericsson studies. Given the (upcoming?) energy crisis, this will only happen if the devices' energy consumption is very low. Moreover, IoT devices are mostly supposed to be autonomous for years. Such a system requires batteries and/or energy scavenging from the environment, which also calls for very low-energy devices. In this talk, we present several aspects of our work on reducing as much as possible the energy consumed by the communications of small devices involved in the IoT. This includes research efforts ranging from hardware design to system architecture, along with many software optimizations, feasibility studies, and new protocols in the networking stack.

Bernard Tourancheau received an MSc in Applied Mathematics from Grenoble University in 1986 and an MSc in Renewable Energy Science and Technology from Loughborough University in 2007. He was awarded best Computer Science PhD by Institut National Polytechnique of Grenoble in 1989 for his work on Parallel Computing for Distributed Memory Architectures. Working for the LIP laboratory, he was appointed assistant professor at Ecole Normale Supérieure de Lyon in 1989 before joining CNRS as a junior researcher. After initiating a CNRS-NSF collaboration, he worked for two and a half years on leave at the University of Tennessee in a senior researcher position with the US Center for Research in Parallel Computation at the ICL laboratory. He then took a Professor position at University of Lyon in 1995, where he created a research laboratory and the INRIA RESO team, specialized in High Speed Networking and HPC Clusters. In 2001, he joined SUN Microsystems Laboratories for a 6-year sabbatical as a Principal Investigator in the DARPA HPCS project, where he led the backplane networking group. Back in academia, he oriented his research towards sensor and actuator networks for building energy efficiency at the ENS LIP and INSA CITI labs. He was appointed Professor at University Joseph Fourier of Grenoble in 2012. Since then, he has been developing research in the Drakkar team of the LIG laboratory on protocols and architectures for the Internet of Things and their applications to energy efficiency in buildings. He also continues his research on optimizing communication algorithms for HPC multicore and GPGPU systems, as well as scientific outreach on the renewable energy transition versus peak oil. He has authored more than a hundred peer-reviewed publications* and filed 10 patents**.

*http://scholar.google.com/citations?hl=en&user=1QNEeL8AAAAJ

**http://www.patentbuddy.com/Inventor/Tourancheau-Bernard/11609171#More

Student Titles and Abstracts

Session of Monday 7th July

Validation of an Interlocking System by Model-Checking

Andrea Bonacchi.

Railway interlocking systems still represent a challenge for formal verification by model checking: the high number of complex interlocking rules that guarantee the safe movements of independent trains in a large station makes the verification of such systems typically incur state space explosion problems.

We describe a study aimed to define a verification process based on commercial modelling and verification tools, for industrially produced interlocking systems, that exploits an appropriate mix of environment abstraction, slicing and CEGAR-like techniques, driven by the low-level knowledge of the interlocking product under verification, in order to support the final validation phase of the implemented products.

Power Contracts for Consistent Power-aware Design

Gregor Nitsche.

Since energy consumption is one of the most limiting factors for embedded and integrated systems, today’s microelectronic design urgently demands power-aware methodologies for early specification, design-space exploration and verification of the designs’ power properties. Most often, these power properties are described by additional power models that extend the functional behavior with the power consumption and power management behavior. Since, for consistency, high-level estimation and modeling of power properties must be based on a more detailed low-level power characterization, we are currently developing a contract- and component-based design concept for power properties, called Power Contracts, to provide a formal link between the bottom-up power characterization of low-level system components and the top-down specification of the systems’ high-level power intent. Formalizing the validity constraints of power models within their assumptions, power contracts provide the corresponding formalized power behavior within their guarantees. Hence, being annotated with power contracts, the sub-components of a system can be composed hierarchically according to the concepts of Virtual Integration (VI) to derive the final system-level power contract. By enabling the formal verification of the power models’ VI, the power contracts allow for a sound and traceable bottom-up integration and verification of power properties.

Framework for Inter-Model Analysis of Cyber-Physical Systems

Ivan Ruchkin.

Cyber-physical systems are engineered using a broad range of modeling methods, from systems of ODEs to finite automata. Each modeling method comprises ways of representing a system (models) and reasoning about it (analyses). The growing diversity of CPS modeling methods creates a challenge of using models and analyses together: what implicit assumptions are models making about each other? In what order should analyses be composed? Incorrect answers to these questions may lead to modeling errors and, eventually, system failures.

In this talk I present a framework for inter-model analysis to deal with the challenge of multi-modeling. The framework allows its user to create architectural views as abstractions of models and specify contracts for analyses. Given views and contracts, the framework verifies model consistency, determines the correct analysis execution sequence, and verifies that the assumptions and guarantees of analyses hold.

Session of Tuesday 8th July

NCPS: a new approach for serious pervasive games

Eric Gressier Soudan.

In this short paper, we describe elements and key features of ongoing research for serious cyber games, the next generation of serious pervasive games. Serious Cyber Games are based on the Networked Cyber Physical Systems (NCPS) framework provided by SRI International Menlo Park.

Regression based Model of the Impedance Cardiac and Respiratory Signals for the Development of a Bio-Impedance Signal Simulator

Yar Muhammad.

A software implemented bio-impedance signal simulator (BISS) is proposed, which can imitate real bio-impedance phenomena for analyzing the performance of various signal processing methods and algorithms. The underlying mathematical models are built by means of a curve-fitting regression method. Three mathematical models were compared (i.e. polynomial, sum of sine waves, and Fourier series) with four different measured impedance cardiography (ICG) datasets and two clean ICG and impedance respirography (IRG) datasets. Statistical analysis (sum of squares error, correlation and execution time) implies that Fourier series is best suited. The models of the ICG and IRG signals are integrated into the proposed simulator.

In the simulator, the correlation between heart rate and respiration rate is taken into account by means of a ratio (5:1, respectively).

Self-healing Behaviour in Smart Grids

Pragya Kirti Gupta

Smart grids are considered to be the future of power systems. Solutions from the domain of Information and Communication Technology (ICT) are increasingly being introduced to better integrate a wide range of distributed energy resources. The objective is to make smart grid systems robust and highly dependable. We present our approach to making a smart grid node self-healing. A complete and consistent set of requirements defines the desired behaviour of the system. This desired system behaviour is continuously monitored to detect, identify and classify faults. Since a self-healing system must also be able to heal itself, it must be aware of faults and their nature in order to perform recovery actions.

3 Examples of Cyber-physical Systems

Lotfi ben Othmane, Fraunhofer SIT, Germany

We give an overview of 3 projects on cyber-physical systems that we contributed to. (We provide mostly the public information on these projects.) The first contribution is in the context of the uCAN system, a system that collects data from connected vehicles in a data repository with the aim of making use of them; it consists of developing a protocol for secure communication between connected vehicles and the remote office. The second contribution is in the context of the CarDemo system, a system for traffic management; it consists of applying an adaptive security technique (i.e., adapting the security mechanisms to changes in the assets) to automate the response of the traffic management system to physical threats. We will demonstrate the system. The third contribution is in the context of a “Partners voor Water” project, whose system controls the usage of water by residents. Then, we provide the results of a survey we ran about the security risks for connected vehicles, which are alarming. We conclude with the main lessons that we learned.

Session of Thursday 10th July

An Interval-Based Approach to Modelling Time

Gintautas Sulskus, Michael Poppleton and Abdolbaghi Rezazadeh.

We consider a formal modelling of multiple concurrent and interdependent processes with timing constraints. This paper is inspired by our work on modelling a cardiac pacemaker, a demanding real-time embedded application involving state based sequencing and timing aspects.

We introduce an approach that is designed to be applicable to a wide range of systems and that supports multiple complex, interconnected timing constraints in concurrent systems.

In our work we showcase the capabilities of our pattern in a small example model based on our pacemaker case study.

Ρέα: Cyber-Physical Workflows

Barnabás Králik and Dávid Juhász

Ρέα (Rea or Rhea) is a workflow language for CPS programming [1]. The design of Ρέα is based on the paradigm of task-oriented programming (TOP). This paradigm has formerly been used to implement classical workflow-based systems using the rich tools and concepts provided by mature lazy functional programming languages. Ρέα strengthens this paradigm by making the notion of unstable values first class citizens of the framework. These represent the ever-changing values of signals coming from the physical environment.

Abstract Model Repair

George Chatzieleftheriou and Panagiotis Katsaros.

Given a Kripke structure M and a CTL formula f, such that f is not satisfied in M, the problem of model repair is to find a new model M' which satisfies f. The state explosion problem is the main limitation of applying automated formal methods such as model checking to large systems. Most of the concrete model-based repair solutions strongly depend on the size of the model. We present a framework which uses abstraction to make model repair feasible for systems with large state spaces.
